Building a Kubernetes Blueprint for vRealize Automation - Intro

Docker Training

For the first two days of DockerCon, I opted for the Docker Fundamentals training as an add-on. I’m glad I did: the hands-on exercises provided some great experience and taught me several new things, and it was nice to have a controlled introduction to Docker, Swarm, and Kubernetes. The VMs used for the training were pre-configured with all the necessary tools, so no time was wasted there. The flip side was that when it came time to set up my own “test” environment, I wasn’t certain what needed to be installed. A few folks in the class had pre-registered for the certification exam before they had even completed the training. Needless to say, the fundamentals-level training and their own hands-on experience weren’t enough preparation, and they failed. I’m glad I anticipated as much and didn’t bother with the exam!

My Goal

My initial goal for this blueprint was to provide a simple-to-consume, single-master, multi-node Kubernetes cluster that offered a choice between Flannel and Calico for the networking plug-in, so that I could re-create some of the hands-on exercises I did in the Docker Fundamentals training. In particular, I wanted to use a NodePort so that every node in the cluster would serve the content on the same port, regardless of which node was actually running the services that provided the content! Sounds simple enough…
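To make the NodePort goal concrete, here is a minimal sketch of a check that asks every node for content on the same port. It is not part of the blueprint; the function names, node names, and port below are hypothetical, and the `fetch` parameter exists only so the check can be exercised without a live cluster.

```python
from typing import Callable, Dict, Iterable
from urllib.request import urlopen


def http_fetch(url: str, timeout: float = 3.0) -> bytes:
    """Fetch a URL and return the response body (raises on failure)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def check_nodeport(
    nodes: Iterable[str],
    port: int,
    fetch: Callable[[str], bytes] = http_fetch,
) -> Dict[str, bool]:
    """Report, per node, whether a request to http://<node>:<port>/ succeeded."""
    results = {}
    for node in nodes:
        url = f"http://{node}:{port}/"
        try:
            fetch(url)
            results[node] = True
        except OSError:
            # URLError (including HTTPError) and socket timeouts derive from OSError.
            results[node] = False
    return results
```

With a healthy cluster, something like `check_nodeport(["k8s-master", "k8s-node1", "k8s-node2"], 30080)` should report `True` for every node, whichever node is running the pods. The host names and the port are placeholders for your own values.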

Existing Blueprint

In order to save myself some time, I did a bit of Googling and checked the VMware Sample Exchange. I came across an old post from Ryan Kelly, “The Kubernetes Blueprint for vRA7 is here!”, and I didn’t even see Mark Brookfield’s post, “Deploying Kubernetes with vRealize Automation”, until I was done with my initial work. Both articles took different approaches from what I did.

These existing efforts are good starts, and I like what Mark did with the Dashboard bit, but neither of them fit my needs, so I opted to build from the ground up with CentOS 7 as my base.

Challenges

I feel like I’m a typical IT person - I try to get things up and running quickly with minimal reliance on “Documentation”. It helps me gauge how intuitive and admin-friendly the stuff is. Sometimes this works rather well; other times, it causes me unnecessary heartache. Case in point: networking for Kubernetes. When you “roll your own” Kubernetes cluster, you need to choose one of the many different networking/policy plug-ins, initialize your cluster, and apply the plug-in. Well, each plug-in has guidance on the pre-defined/preferred Pod Network CIDR that should be specified when initializing the Kubernetes cluster.

For example, Calico prefers 192.168.0.0/16, Flannel and some others document 10.244.0.0/16, and others simply specify that you should be sure to include the --pod-network-cidr= switch when running kubeadm init. Since I didn’t read the docs, I simply initialized my cluster, searched around a bit, plugged in one of the options, and tested a few deployments/pods in the cluster. At first glance, things looked OK, but when I applied a NodePort to the deployment, I got inconsistent results: either one or none of the nodes would serve up the desired content on that port!
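One way to avoid that mismatch is to derive the kubeadm init arguments from the plug-in choice instead of typing them by hand. The following is a minimal sketch, not the blueprint's actual script: the function name and the lookup table are hypothetical, and the CIDR values are simply the ones quoted above, so check them against each plug-in's current documentation.

```python
import ipaddress
from typing import List

# Defaults quoted in the text above; confirm against each plug-in's own docs.
POD_NETWORK_CIDRS = {
    "calico": "192.168.0.0/16",
    "flannel": "10.244.0.0/16",
}


def kubeadm_init_command(plugin: str) -> List[str]:
    """Build the 'kubeadm init' argument list for the chosen network plug-in."""
    key = plugin.strip().lower()
    if key not in POD_NETWORK_CIDRS:
        raise ValueError(f"no pod network CIDR known for plug-in {plugin!r}")
    # ip_network() rejects malformed CIDRs before they reach kubeadm.
    cidr = ipaddress.ip_network(POD_NETWORK_CIDRS[key])
    return ["kubeadm", "init", f"--pod-network-cidr={cidr}"]
```

The returned list can be handed to `subprocess.run` on the master. Keeping the CIDR next to the plug-in name means the cluster is always initialized with the range the plug-in's manifest expects.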

Results

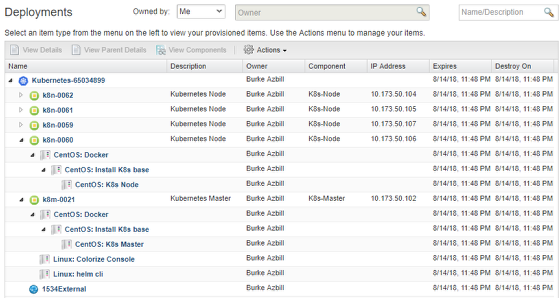

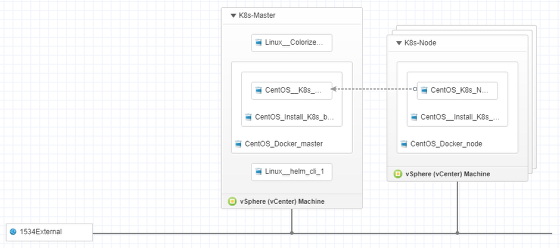

Blueprint Preview

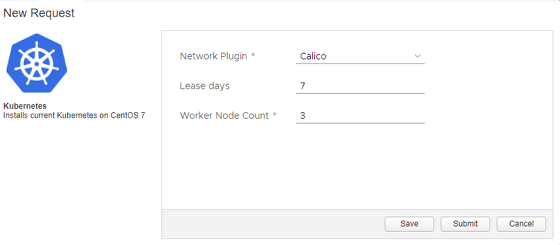

Request Form

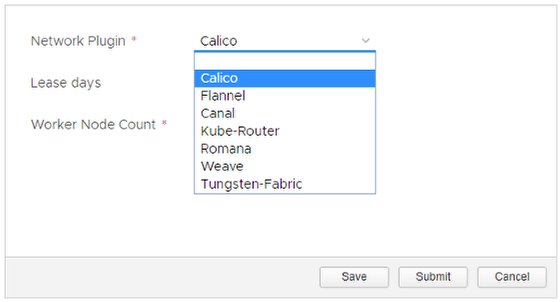

Network Plugin Options

The drop-down is simple text, nothing special or dynamic here. The software component for the K8s Master takes this string as an input, then pulls the appropriate YAML manifest and applies it to the cluster.

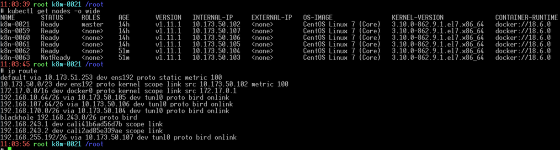
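The logic in the software component amounts to a lookup from the drop-down string to a manifest, followed by a kubectl apply. Here is a rough sketch of that idea, not the blueprint's actual script: the function name is hypothetical and the manifest URLs are placeholders, since this post does not list the real ones.

```python
from typing import Dict, List

# Placeholder locations: the real manifest URLs are not given in this post.
MANIFESTS: Dict[str, str] = {
    "Calico": "https://example.invalid/calico.yaml",
    "Flannel": "https://example.invalid/kube-flannel.yml",
}


def kubectl_apply_command(selection: str, manifests: Dict[str, str] = MANIFESTS) -> List[str]:
    """Translate the drop-down value into a 'kubectl apply -f <manifest>' command."""
    try:
        manifest = manifests[selection]
    except KeyError:
        # Fail loudly rather than leaving the cluster without a network plug-in.
        raise ValueError(f"unsupported network plug-in: {selection!r}") from None
    return ["kubectl", "apply", "-f", manifest]
```

Because the drop-down is plain text, the lookup keys have to match its entries exactly. Rejecting unknown values keeps a typo in the form from silently producing a cluster with no pod networking.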

Nodes in the cluster and cluster routing between nodes ready

Based on the earlier text about my challenges, you can tell from this screenshot that I chose “Calico” for this deployment: there are routes to 192.168.x.x networks for each node in the cluster. Scaling out the nodes in vRA will result in the new node(s) being automatically added to the cluster and the additional routes being created.
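The same check can be done programmatically by testing which route destinations fall inside the plug-in's pod network. This is a small sketch with a hypothetical function name; it assumes the destinations have already been extracted from the node's routing table as CIDR strings.

```python
import ipaddress
from typing import Iterable, List


def routes_in_pod_cidr(route_destinations: Iterable[str], pod_cidr: str) -> List[str]:
    """Return the route destinations that are subnets of the pod network CIDR."""
    pod_network = ipaddress.ip_network(pod_cidr)
    matches = []
    for destination in route_destinations:
        # strict=False tolerates destinations written with host bits set.
        route = ipaddress.ip_network(destination, strict=False)
        # subnet_of() raises TypeError on mixed IPv4/IPv6, so compare versions first.
        if route.version == pod_network.version and route.subnet_of(pod_network):
            matches.append(destination)
    return matches
```

For example, with made-up destinations `["10.0.0.0/24", "192.168.35.64/26", "192.168.104.0/26"]` and the Calico range `192.168.0.0/16`, only the two 192.168.x.x entries are returned. After a scale-out in vRA, the list should grow by one entry per new node.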

Where to find the blueprint

The blueprint has been released on VMware Code’s Sample Exchange under vRA Blueprints. Visit Sample Exchange